I find it useful to know how well calibrated my predictions are: of the events to which I assign 80% probability of occurrence, what fraction actually occur?

If a person is a well-calibrated predictor, then things that they say happen 50% of the time do in fact happen about 50% of the time.
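To make that definition concrete, here is a minimal sketch of how a prediction log could be tallied. The function name `calibration_table` and the sample log are made up for illustration; this is not how any particular app does it.

```python
from collections import defaultdict

def calibration_table(predictions):
    """Group (stated_probability, outcome) pairs by stated probability.

    Returns {stated_probability: (count, observed_frequency)}.
    """
    bins = defaultdict(list)
    for stated, happened in predictions:
        bins[stated].append(1 if happened else 0)
    return {p: (len(v), sum(v) / len(v)) for p, v in sorted(bins.items())}

# Hypothetical log: five predictions made at 80%, four made at 50%.
log = [(0.8, True), (0.8, True), (0.8, False), (0.8, True), (0.8, True),
       (0.5, True), (0.5, False), (0.5, False), (0.5, True)]

table = calibration_table(log)
for stated, (count, observed) in table.items():
    print(f"said {stated:.0%}: {count} predictions, {observed:.0%} came true")
```

In this invented log the observed frequencies match the stated ones, which is what good calibration looks like. With so few predictions per bin, a match like this says very little.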

Calibrating yourself is an exercise in at least two different activities.

First, when attempting to become well-calibrated, you're forced to take a second glance at your own predictions. I keep a personal prediction book (for purposes of self-calibration), and there's this moment between saying a prediction and going to write the prediction down where I introspect and make sure that I actually believe my own prediction.

For example, if I say to someone "80% chance I'll make it to the party by 20:00", then this triggers an impulse to write down the prediction, which triggers an impulse to check whether the prediction is sane. Am I succumbing to the planning fallacy? How surprised would I actually be if, at 19:45, I was neck-deep in some other project and decided not to go? Keeping a personal prediction book gives me an excuse to examine my predictions on more than just a gut level.

The second thing that self-calibration is about is figuring out how those subjective feelings of likelihoodness actually attach to numbers. I, at least, have an internal sense of likelihood, and it takes a little while to look at a lot of events that are "eh, kinda likely but not too likely" and figure out how many of them actually happen.

For that, I use tools like the Calibration Game Android app, which is not the prettiest app, but does a good job of helping me map the feelings onto the numbers.

However, I have a problem when self-calibrating, both with a personal prediction book and with the Calibration Game app: these games provide me with perverse incentives.

My goal with these tools is to improve my calibration. I would also like to improve my accuracy, of course, but I tend to do that using other methods (e.g., various epistemic rationality techniques). I use a personal prediction book and an Android app specifically with the goal of becoming better calibrated.

And the problem is, it's actually pretty easy to trade off accuracy for calibration in these games. If you're playing for calibration, the incentive structure is perverse.

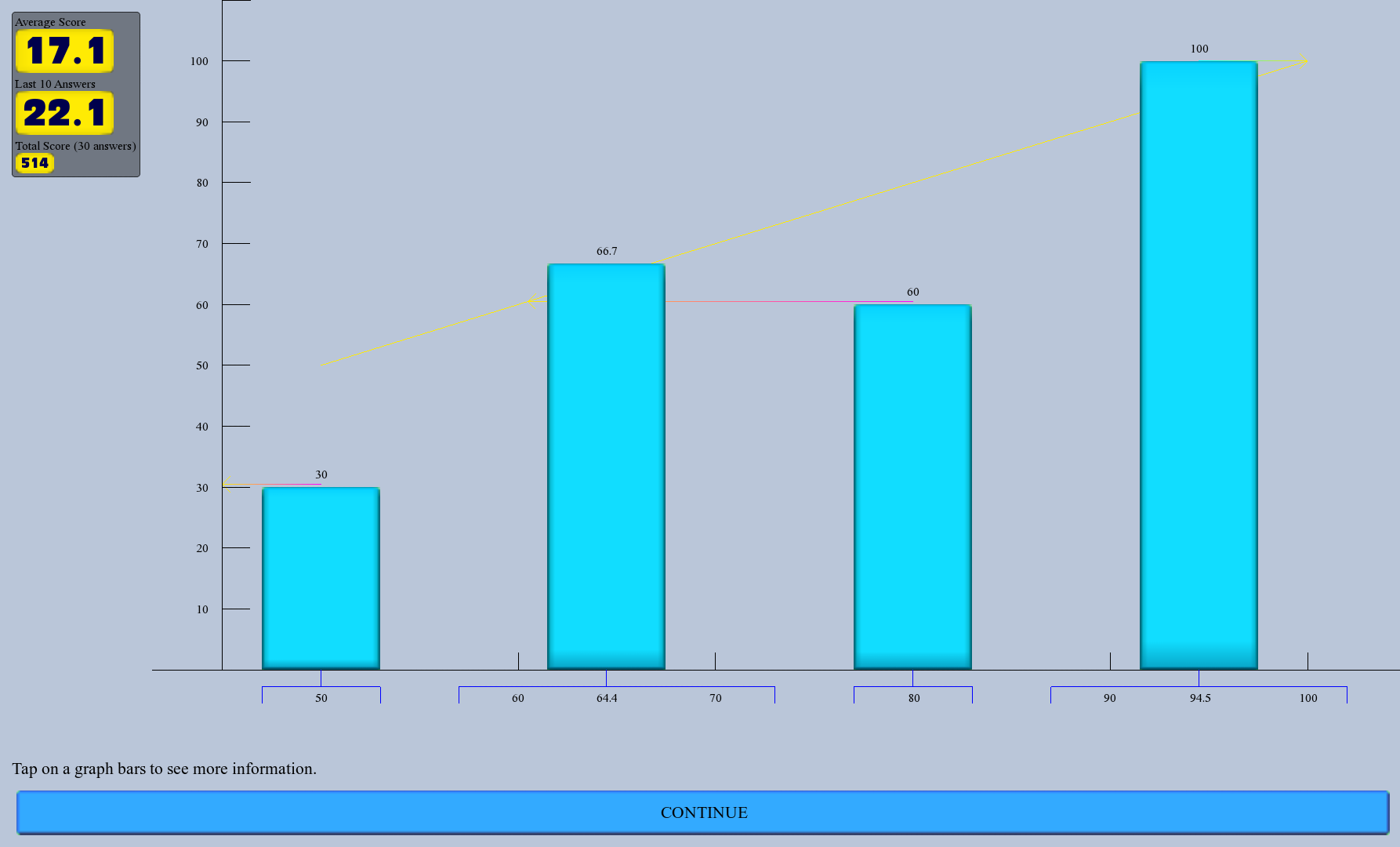

For example, let's say that I've been playing the calibration game for a little while and you have a graph that looks like this:

This graph shows an early point in the game, at which point there is some overconfidence in both the 80% range and the 50% range.

Now, if you want to maximize your accuracy score (the number in the top left corner) then no matter what your calibration graph looks like, you should keep answering to the best of your ability, using the information in the above graph. For example, you might downgrade the probability of events that felt like "~80% likelihood" to ~60%. (Actually, this graph shows a game where there have been only 30 questions, so I'd suggest playing a while longer before adjusting your labels.)

However, if you're trying to just improve your calibration (according to the game) then you can just take a few things that you're 90% certain of and call them 80% certain, to improve your numbers in the 80% region.
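Here is a rough sketch of that trade with invented numbers. I don't know how the app computes its accuracy score, so the Brier score (mean squared error between stated probability and outcome, lower is better) stands in for it here as an assumption. The helper names and the data are hypothetical.

```python
def observed_frequency(records, stated):
    """Fraction of predictions recorded at `stated` that came true."""
    outcomes = [happened for p, happened in records if p == stated]
    return sum(outcomes) / len(outcomes)

def brier_score(records):
    """Mean squared error between stated probability and outcome (lower is better)."""
    return sum((p - happened) ** 2 for p, happened in records) / len(records)

# An overconfident 80% bin: ten predictions, only six came true.
existing = [(0.8, 1)] * 6 + [(0.8, 0)] * 4
# Ten easy questions that I actually believe at 90%; nine come true.
easy_outcomes = [1] * 9 + [0]

honest = existing + [(0.9, o) for o in easy_outcomes]  # recorded as believed
gamed = existing + [(0.8, o) for o in easy_outcomes]   # mislabeled as 80%

honest_gap = abs(observed_frequency(honest, 0.8) - 0.8)
gamed_gap = abs(observed_frequency(gamed, 0.8) - 0.8)
honest_brier = brier_score(honest)
gamed_brier = brier_score(gamed)

print(f"80% bin miss:  honest {honest_gap:.2f}, gamed {gamed_gap:.2f}")
print(f"Brier score:   honest {honest_brier:.3f}, gamed {gamed_brier:.3f}")
```

With these numbers, mislabeling shrinks the 80% bin's miss from about 0.20 to about 0.05, while the Brier score gets slightly worse (about 0.190 instead of 0.185). The graph looks better and the predictions got less accurate.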

This is a perverse incentive, but it's really hard to stop my brain from doing it when I'm not paying attention. My autopilot is really aesthetically pleased by a calibration graph that lines up nicely with the Line Of Good Calibration, and my inner reasoner affirms that this is a laudable goal, so if I play the Calibration Game while zoning out then I'm liable to throw easy questions one way or another in order to improve my calibration.

To a lesser extent, this behavior extends to my personal prediction book. If I've been doing really poorly in the 80% bin (e.g., only half of my 80% predictions have come true), then there's an incentive to be more careful about what I assign 80% probability to (which is good), but there's also a perverse incentive to take something that I think is >90% likely and record it under the "80% likely" header, in order to make the calibration numbers look right.

I am (obviously) aware of this impulse, and I like to pretend that I'm pretty good at suppressing it, but let's not kid ourselves: for every time you catch yourself acting biased, there are probably half a dozen instances you missed.

And frankly, I don't think that more vigilance and self-control is the answer. My deep-seated desire to Get All The Points is something that I want to work with me, not against me. The problem here is the perverse incentives: I'm trying to improve my calibration, and there's an easy way to appear really well calibrated so long as I don't care about the accuracy score.

I could start ignoring calibration and caring only about the accuracy score, and the right answer here might be "find tools that make the accuracy score much more prominent and tell yourself you're training accuracy, and then you'll improve your calibration as a side effect".
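One reason this might work: if the accuracy score is a proper scoring rule, then honest reporting gives the best expected score. I don't know which rule the app uses, so the sketch below assumes the Brier score (lower is better), and `expected_brier` is a name I made up. It searches over possible reports for someone whose true belief is 90%.

```python
def expected_brier(belief, report):
    """Expected Brier penalty when the event happens with probability `belief`
    but the prediction is recorded as `report`."""
    return belief * (1 - report) ** 2 + (1 - belief) * report ** 2

belief = 0.9
reports = [i / 100 for i in range(101)]  # candidate reports: 0.00, 0.01, ..., 1.00
best_report = min(reports, key=lambda r: expected_brier(belief, r))

print(f"best report for a {belief:.0%} belief: {best_report:.0%}")
print(f"expected penalty at 90%: {expected_brier(belief, 0.9):.3f}")
print(f"expected penalty at 80%: {expected_brier(belief, 0.8):.3f}")
```

Under that assumption, shading a 90% belief down to 80% always costs points in expectation, so the desire to Get All The Points pushes toward honest reporting rather than away from it.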

But before resorting to that, it does seem like there might be clever calibration-training games which avoid these perverse incentives. Anyone have ideas? If I'm trying to maximize calibration (and not necessarily accuracy), is there a game that always incentivizes me to accurately report my beliefs?